- Principal Investigator: Santani Teng

Participate in our studies! Visit Teng Lab Experiment Participation for details.

Welcome to the Cognition, Action, and Neural Dynamics Laboratory

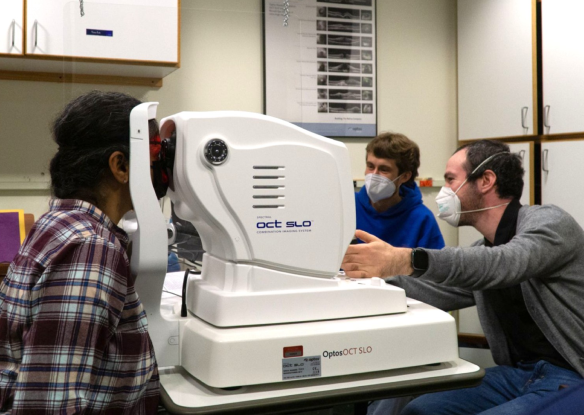

We aim to better understand how people perceive, interact with, and move through the world, especially when vision is unavailable. To this end, the lab studies perception and sensory processing in multiple sensory modalities, with particular interests in echolocation and braille reading in blind persons. We are also interested in mobility and navigation, including assistive technology using nonvisual cues. These are wide-ranging topics, which we approach using a combination of psychophysical, neurophysiological, engineering, and computational tools.

Spatial Perception and Navigation

It's critically important to have a sense of the space around us: nearby objects, obstacles, people, walls, pathways, hazards, and so on. How do we convert sensory information into that perceptual space, especially when augmenting or replacing vision? How is this information applied when actually navigating to a destination? One cue to the surrounding environment is acoustic reverberation. Sounds reflect off thousands of nearby and distant surfaces, and these reflections blend together into a signature of the size, shape, and contents of a scene. Previous research shows that our auditory systems separate reverberant backgrounds from direct sounds, guided by internal statistical models of real-world acoustics. We continue this work by asking, for example, when reverberant scene analysis develops and how blindness affects it.

Echolocation, Braille, and Active Sensing

Sometimes, the surrounding world is too dark and silent for typical vision and hearing. This is true in deep caves, for example, or in murky water where little light penetrates. Animals living in these environments often have the ability to echolocate: they make sounds and listen for their reflections. Like turning on a flashlight in a dark room, echolocation is a way to illuminate objects and spaces actively using sound. Using tongue clicks, cane taps, or footfalls, some blind humans have demonstrated an ability to use echolocation (also called sonar, or biosonar in living organisms) to sense, interact with, and move around the world. What information does echolocation afford its practitioners? What factors contribute to learning it? How accessible is it to blind and sighted people alike?

Neural Dynamics and Crossmodal Plasticity

The visual cortex does not fall silent in blindness. About half the human neocortex is thought to be devoted to visual processing. In blind people, who lack the visual input that normally drives these regions, the same areas become responsive to auditory, tactile, and other nonvisual tasks, a phenomenon called crossmodal plasticity. What kinds of computations take place under these circumstances? How do crossmodally recruited brain regions represent information? To what extent are the connections mediating this plasticity also present in sighted people? These and many other basic questions remain debated despite decades of research on crossmodal plasticity.

Want to join the lab or participate in a study?

Check here for details on any current funded or volunteer opportunities.

For inquiries, email the lab PI at santani@ski.org