Reaching with Central Field Loss

Parent R01

Eye-Hand coordination in Central Field Loss

Eye-hand coordination in AMD

Strategies for Efficient Visual Information Gathering

Active visual search

Target Selection in the Real World

Attention and Segmentation

Display Reader

The goal of the Display Reader project is to develop a computer vision system that runs on smartphones and tablets to enable blind and visually impaired persons to read appliance displays. Such displays are found on an increasing array of appliances such as microwave ovens, thermostats and home medical devices.

Display Reader software is available for download.

CamIO

CamIO (short for “Camera Input-Output”) is a system to make physical objects (such as documents, maps, devices and 3D models) accessible to blind and visually impaired persons, by providing real-time audio feedback in response to the location on an object that the user is touching. CamIO currently works on iOS using the built-in camera and an inexpensive hand-held stylus, made out of materials such as 3D-printed plastic, paper or wood.

See a short video demonstration of CamIO here, showing how the user can trigger audio labels by pointing a stylus at “hotspots” on a 3D map of a playground. See…

BLaDE

BLaDE (Barcode Localization and Decoding Engine) is an Android smartphone app designed to enable a blind or visually impaired user to find and read product barcodes. The primary innovation of BLaDE, relative to most commercially available smartphone apps for reading barcodes, is that it provides real-time audio feedback to help visually impaired users find a barcode, which is a prerequisite to reading it.

Link to BLaDE software download: http://legacy.ski.org/Rehab/Coughlan_lab/BLaDE/

Click here for a YouTube video demo of BLaDE in action.

Crosswatch

Crosswatch is a smartphone-based system developed for providing real-time guidance to blind and visually impaired travelers at traffic intersections. Using the smartphone’s built-in camera and other sensors, Crosswatch is designed to tell blind and visually impaired travelers what kind of traffic intersection they are near, how to align themselves properly to the crosswalk, and when the walk light or other traffic light indicates it is time to cross.

Click here for a zip file containing the open-source Crosswatch code and documentation.

Go and Nogo Decision Making

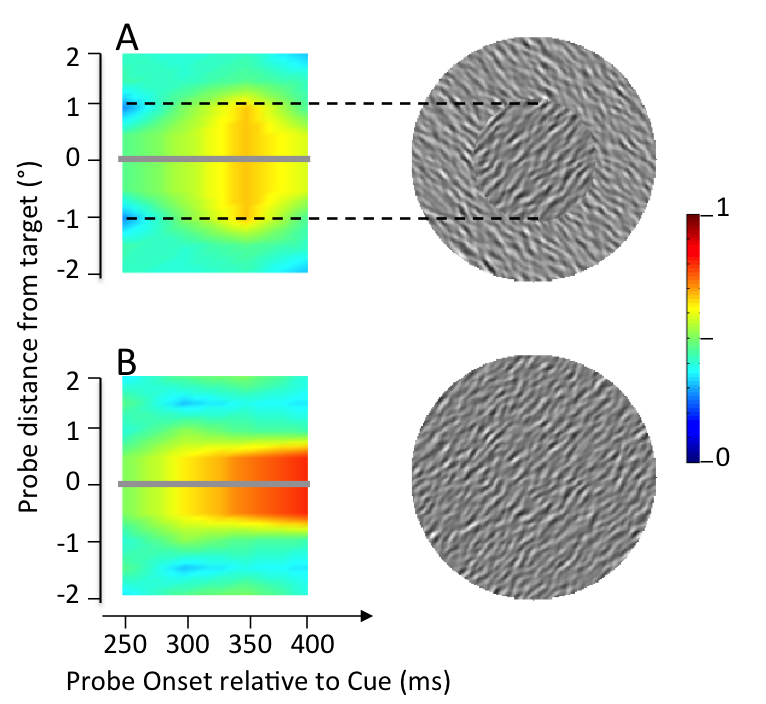

The decision to make or withhold a saccade has been studied extensively using a go-nogo paradigm, but little is known about the decision process underlying pursuit of moving objects. Prevailing models describe pursuit as a feedback system that responds reactively to a moving stimulus. However, situations often arise in which it is disadvantageous to pursue, and humans do not have to pursue an object just because it moves: they can decide to withhold pursuit. This project explores mechanisms underlying the decision to pursue or maintain fixation. Our paradigm, ocular baseball, involves a target that moves from the periphery…

Integration and Segregation

Traditionally, smooth pursuit research has explored how eye movements are generated to follow small, isolated targets that fit within the fovea. Objects in a natural scene, however, are often larger and extend to peripheral retina. They also have components that move in different directions or at different speeds (e.g., wings, legs). To generate a single velocity command for smooth pursuit, motion information from the components must be integrated. Simultaneously, it may be necessary to attend to features of the object while pursuing it.