Event Date:

Thursday, December 8th, 2022 – 12:00 PM to 1:00 PM

Speaker:

Abigale Stangl is a research scientist working at the intersection of human-computer interaction, accessibility, creativity, privacy, and computer vision.

Host:

James Coughlan, Senior Scientist and Coughlan Lab Director

Abstract:

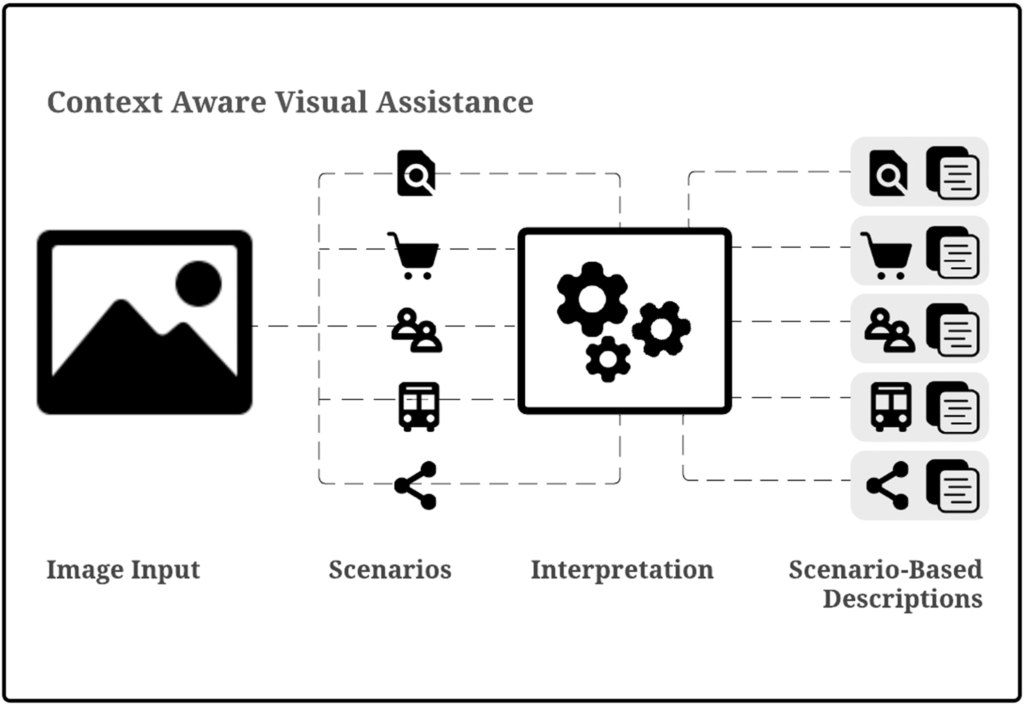

Image descriptions, audio descriptions, and tactile media provide non-visual access to the information contained in visual media. As intelligent systems are increasingly developed to provide non-visual access, questions about the accuracy of these systems arise. In this talk, I will present my efforts to involve people who are blind in the development of information taxonomies and annotated datasets, with the aim of enabling more accurate and context-aware visual assistance technologies and tactile media interfaces.